Interpreting the Artist's Mind: Using LLMs as a Neural Surrogate

Through mechanistic interpretability, and drawing a parallel to Hebbian learning—the neuroscientific principle that “neurons that fire together, wire together”—this project explores whether an LLM can serve as a neural surrogate to extend an artist's cognitive process and tests if linking this extended thought to a sensory output can create a palpable sense of fulfillment. It ponders two central questions for creators in the age of AI: how can one collaborate with generative models to amplify a unique voice, rather than be lost in the echoes of others? And as AI takes on more of the 'doing' how do we fill the innate human need to tangibly create?

Framing the Questions

It’s April 2021. Sesh and I are on a road trip, stopped in a near-empty Myrtle Beach during the off-season. We find a cozy cafe to escape the sharp wind. Inside, we’re sipping coffee, admiring a huge post-modern artwork hanging on the high-ceiling wall. Our conversation starts with the piece itself, but quickly shifts to how it was made, spiraling into the familiar debate about process versus outcome. The discussion sharpens, focusing on the role technology might play in a future where the artist's hand is no longer needed. In retrospect, this disagreement sets the course for this entire project.

This abstract debate is a reflection of our own paths. Sesh is a long-time engineering leader and a multidisciplinary thinker who has competed at a national level in school and done commissioned work as an artist. Despite not actively painting now, he still approaches problems with an artist’s mind. I am an artist who has also done commissioned work in pencil and watercolor, and a self-taught engineer still wrestling with how to merge these two identities. My background in linguistics—studying how structure and symbols create personal meaning—makes me fiercely protective of nuance. Our perspectives are a blend of the analytical and the creative, and this conversation is where they collide.

Sesh lays out his prognosis. "The artist of the future," he says, "will also have to be an engineer. The physical act of painting, of executing—that can and will be delegated. To be successful, you have to be a judge with exceptional taste." He points out this isn't new, citing how masters like Michelangelo directed large workshops of assistants to execute their grand visions.

For me, this statement is jarring, threatening to turn the conversation into a real argument. I can see the cold logic in Sesh’s point about inevitability, but the idea is deeply unpalatable. My attachment to the process is personal. I feel compelled to dig for the core of this conviction. "But the process is the value," I argue. "The physical act is therapeutic. You could make the argument that a piece of art is most valuable to the artist themselves, and that value is generated directly through their participation in the process. The final product for an outsider is secondary to that experience." To delegate the process feels like a fundamental contradiction to me.

I can hold both viewpoints as valid—there's truth in each—but the question of how to reconcile them is unanswered. How do you embrace the inexorable scale of technology without sacrificing the fulfillment that comes from the tangible act of creation? The question will become more pressing as AI explodes into the mainstream. How do you collaborate with a tool so powerful that, if you’re not vigilant, it will drive you and not the other way around, until your own voice is lost in an echo of its training data?

It is July 2025, hackathon week at Datadog, I am hacking with Sesh on AI and I take one of my own black-and-white drawings and digitally cover 60% of it with green. I then use Toto, a time-series forecasting model Datadog just open-sourced, to "predict" the missing pixels. It’s an unconventional hack—a Python script that treats the grayscale values in each pixel column as a time series point. The result is crude, a ghostly statistical forecast. Shortly after, I show the experiment to Sesh. He sees a glimmer of something more profound in my spontaneous hack. "You could do this intentionally," he suggests, "start a piece and let a model finish your thoughts." I'm skeptical. The crude result from Toto doesn't inspire confidence that a model could meaningfully collaborate.

But a few weeks later, that skepticism is challenged. I’m at the kitchen counter, drawing with our 20-month-old son. He’s making a flurry of rough, energetic marks with his crayons—the kind of pure, unthinking scribbles a toddler makes. In a spur-of-the-moment impulse, I take the page and, right there with him, add a few of my own lines. In under a minute, his scribbles are transformed into the outline of a female face. The result feels fluid and alive, a seamless completion. The experience is a revelation; it clicks that this is what Sesh was talking about. Seeing this tangible example, I realize there is merit to his idea.

Meanwhile, Sesh has been discussing AI art with Grace from Lux Capital and her friend Valentina Calore, who blogs her work as "Latent Space." Sesh shows Grace my crude Toto experiment as a curiosity. Intrigued, Grace suggests I present my work at an upcoming AI Summit. Suddenly, our lingering philosophical debate has a deadline and a purpose.

Searching for a Method

So, I start thinking about how to collaborate with an AI in a way that felt acceptable to me—satisfying, not a compromise. What does 'AI' mean in this context? What kind of model could act as a creative partner? What felt most authentic was not to use AI as a pure generator, but as a completer. It’s a feeling that will be validated in October 2025, when Andrej Karpathy appeared on the Dwarkesh Podcast. He'll say his preferred workflow for coding is to start writing himself and let the AI only autocomplete, arguing that the ideal AI collaboration isn’t about full automation, but about tools that assist and elevate human work. It’s a clear echo of the idea I’m wrestling with now: I need to initiate the logic and let the AI build on it, rather than having it attempt to architect something from scratch with a prompt.

At the same time, mechanistic interpretability (MI) is capturing the community's attention. Anthropic's paper from earlier that spring, Tracing the thoughts of a large language model was all over my feed and dominated my conversations with Sesh. The idea of reverse-engineering a model's decisions is thought-provoking. As I conceptualize the art project, the two ideas meet: what if I could collaborate with an AI to complete my art, and read its thoughts as it works? It wouldn't just be a tool; it would be a surrogate—my neural surrogate. By interpreting its process of completing what I started, maybe I could, in a way, interpret my own subconscious thoughts.

Now I’m wondering what kind of AI process would be a faithful representation of my process? My own method isn't a single action; I draw iteratively, slowly building from a very rough sketch to final details. I needed to find a model and an MI technique that mirrored this.

It feels like any model that learns the process of creating a drawing as it changes over time, rather than just producing a final, static image, would be a better fit. This search for a temporal, process-oriented model leads me to stroke-based models like SketchRNN. They predict lines and curves sequentially, which feels incredibly human-like, in theory. But I quickly find that they are primarily trained on simple SVG doodles of specific simple subjects, like cats and pigs. The thought of training a custom model like that on my own complex, scanned sketches is a curious technical challenge in its own right, but my immediate goal is to find a process I can actually use to create a finished piece of art in the nearest future, so this remains an exploration reserved for another time.

Next, I look into process-conditioned diffusion models. These treat art creation like a video, moving from abstract shapes to fine detail. The ideal MI approach here seems to be using Sparse Autoencoders (SAEs), which, according to the papers I’m reading, can decompose a model’s internal activations into human-interpretable concepts like "warm tones" or "symmetry." To do that, however, you need an SAE, and I quickly find that there aren't many pre-trained SAEs for diffusion models out-of-the-box; the ones I come across are all custom. This means I would likely have to train my own. I look into it and find the process fascinating in theory. I come across research showing how this can be largely automated; as I understand it from one paper, the process would involve adding hooks to a model, running a collector on its activations to gather data, training the autoencoder, and then using other models to automatically label the learned features into a concept dictionary. It's a compelling research project on its own, but I have a couple of other concerns. First, the open-source diffusion models I test for the actual completion task just don't perform well enough for my liking. The style is often inconsistent and misaligned with my own, and worse, they aggressively override existing content unless I use context-aware fills, and that means that it's less of continuing my work and more of the model adding its own work in the patches of the canvas that I designate. The second consideration is more fundamental. The more I think about it, the less a diffusion model's process feels like a good surrogate for an artist's. In my opinion, the way it generates an image from pure noise is alien to how I, or any human artist, works. It feels like trying to interpret delirium when I am looking to interpret a thought.

Through this journey I finally arrive at a moment of clarity. So far I've been focused on interpreting the model's visual process, but my own process doesn't start there. Before my pencil ever touches the paper, I have a clear, verbalizable concept. I think, then I draw. The thought process of a reasoning model, a chain of text, is a far better surrogate for my own creative process than the pixel-level adjustments of a diffusion model.

Now I’m looking for ways to decode and interpret plans. Initially, I encountered experiments focused on tracing how the next token is predicted in LLMs—techniques like activation patching and logit lens from Anthropic's transformer circuits framework I mentioned earlier. But this feels too granular for our needs, dissecting individual tokens rather than meaningful reasoning units.

As I peruse the latest on arXiv, I discover the Thought Anchors framework. It’s a tool that analyzes a text-based chain of thought and identifies the most influential sentences—the key planning steps, the moments of uncertainty or correction. According to the paper, it attributes importance to individual sentences using a few complementary methods. These "thought anchors" are typically planning or backtracking sentences that disproportionately influence downstream reasoning and the final output, providing metrics like importance scores and dependency graphs. This seems to align with my own "conceptualize first" creative process.

Adapting the Tools

I fork the Thought Anchors repository and find it's engineered for objective use cases, like math problems, where answers are binary—either right or wrong. It's also designed for text-only inputs. My project requires the model to see an image, and the outcome is subjective and open-ended, so I know I'll need to make some changes.

My initial hope is to use a smaller, open-source model like Qwen or Llama. The exciting part here is the potential for "white-box" analysis. The Thought Anchors paper actually outlines three complementary methods to figure out which sentences are important. There's the "black-box" method, which is like testing a recipe by leaving out an ingredient: you treat the model as an opaque box, remove or change sentences in its reasoning, and run the inference over and over to see how the outcome changes. Then there are two "white-box" methods that let you look inside the model itself. The first is attention aggregation, which identifies which past sentences the model is paying the most attention to. The second, and the one I am most interested in, is causal attribution via "attention suppression." This technique is more like brain surgery: instead of changing the input text, you surgically prevent the model from "looking at" a specific sentence and directly measure how that intervention changes its subsequent thoughts.

The white-box approach feels more direct, more "mechanistic," and as an engineer, that is very appealing. So I start experimenting with smaller models where I can get my hands on the internals. But there is an issue: their actual output is not good enough. Sometimes they go against the explicit instructions listed in the prompt, which means following the plans they produced creates glaring breaks in the continuity I am looking for. I can't honestly build my project around an analysis of a creative plan that I won't actually use to create art.

In contrast, the plans generated by GPT-5 are consistently nuanced, creative, and most importantly, they make sense to me. I genuinely like them. The trade-off is that GPT-5 is a closed system. I can't perform surgery on it. The only way to analyze its reasoning is with the black-box method. So, the choice, again, is a pragmatic one.

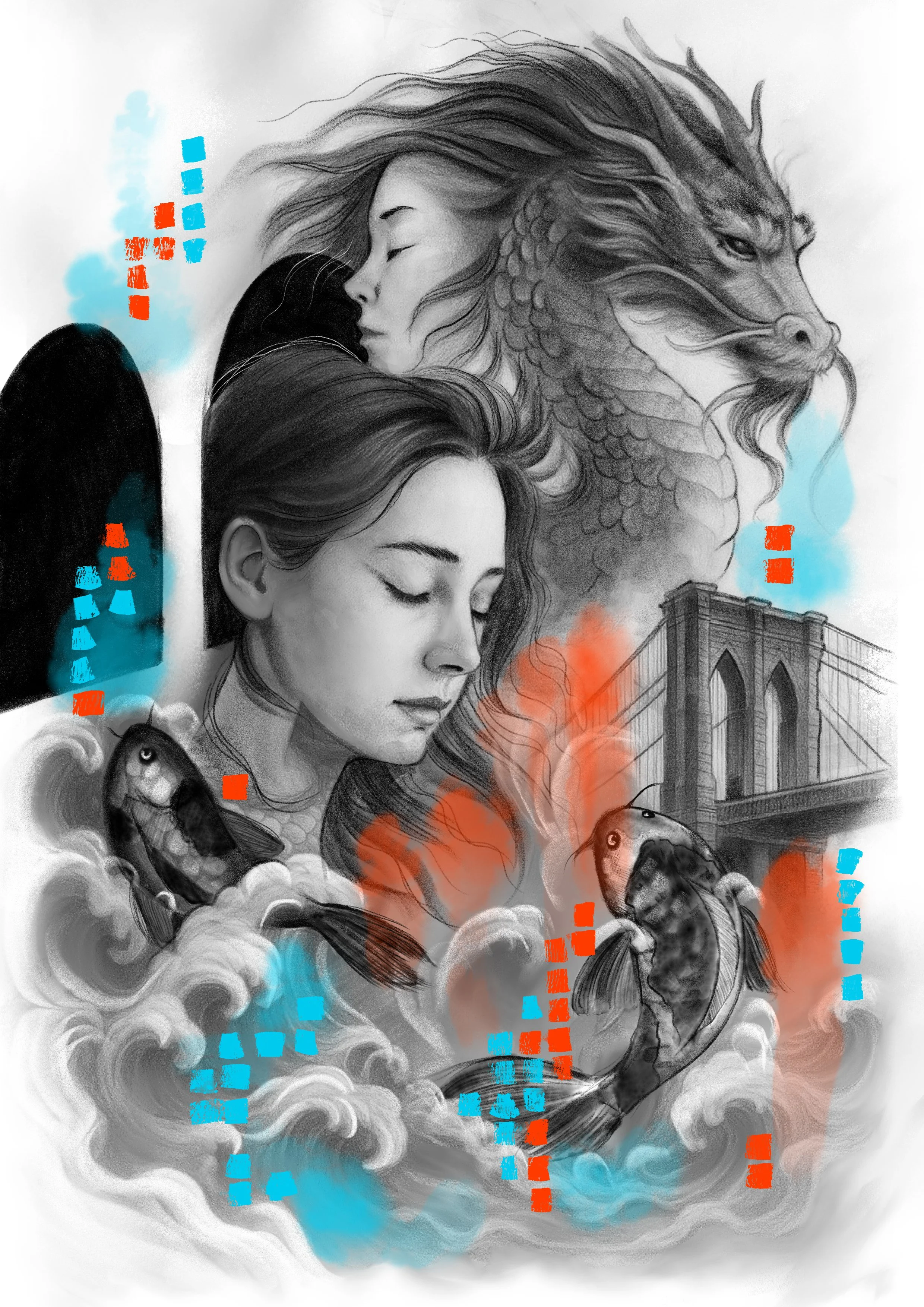

I settle on GPT-5. First, I make changes to the data pipeline to make sure I’m able to pass images to the model as part of the prompt. My prompt is a combination of three things: one of my finished drawings to act as a style reference, the new unfinished sketch for this project, and a text instruction telling the model to create a completion plan based on both.

"Analyze both images, create a detailed plan to complete unfinished.jpg in the style of finished.jpg. The architecture elements of finished.jpg are Brooklyn Bridge, Domino Sugar factory in NYC, creatures in finishied.jpg are koi turning into dragon; The architecture elements of unfinished.jpg are Coney Island, creature in unfinished.jpg is Baku (the dream eater yokai). The final image MUST be black and white. Do not bring architecture elements and creatures from finished into unfinished. Use the composition and subjects from the unfinished. These drawings capture the melancholic intimacy of being in a long-term relationship with New York City itself - depicting the city as living creatures."

(the plan generation prompt)

Next, to handle subjective evaluation, I replace the binary right-or-wrong check with a continuous quality score, using GPT-5 as a "judge" to rate the merit of each plan according to my evaluation prompt. I also change the taxonomy of reasoning categories so they are more tailored to creative analysis, like Technical Analysis and Emotional Interpretation.

“Rate the quality of this completion plan (1-10). Consider: Does the response correctly identify and describe the artistic style, techniques, and characteristics of the finished drawing (it should be black and white expressive pencil realism)? Does the plan show understanding of composition principles (balance, focal points, visual flow) and explain how to implement compositional elements? Is the proposed plan for completing the unfinished drawing logical, detailed, and stylistically consistent? Would an artist be able to follow this plan to create a coherent completion? Provide a single quality score (1-10) where: 1-3: Poor (vague, inaccurate, or impractical), 4-6: Average (basic analysis with some useful elements), 7-8: Good (comprehensive analysis with clear, actionable insights), 9-10: Excellent (expert-level analysis with sophisticated artistic understanding)”

(the evaluation prompt)

I rethink the importance metrics too. Resampling importance (which measures how much performance drops when you regenerate from a given chunk onward) still works conceptually, but I need to replace the accuracy measurement with quality variance—instead of counting correct vs. incorrect answers, I'm now measuring the spread in GPT-5 quality scores across rollouts. Counterfactual importance (which works by swapping a chunk with a "similar but different" alternative to measure its causal impact), however, doesn’t seem reliable for my use-case: I can’t think of a clean way to find "similar but different" creative insights the way you can swap mathematical approaches (substitution vs. elimination). Creative reasoning is more of a continuous narrative thread—you don’t think you can swap one interpretive move without unraveling the whole analysis. Similarly, forced answer experiments (which anchor the model on a wrong answer early to see if it propagates) don't apply either since there's no "wrong answer" to anchor on in subjective tasks. So I keep resampling as the core metric but drop counterfactual and forced answer analyses from the creative pipeline.

The Results: What the Surrogate Revealed

With the adapted pipeline ready, I run the full black-box analysis on the task of having GPT-5 generate a creative plan to complete my unfinished drawing. Watching the results come in is revealing. I’m observing a few patterns.

The strongest anchors, it turns out, are highly specific technical directives. The top positive anchor (+0.032 importance) is the value plan specification: "Lightest lights: highlights on the three faces; mist around them; rim light on Baku's trunk; bulbs on the Wonder Wheel/Parachute Jump." While this number may seem small on a 0-1 scale, it's statistically significant—about 2 standard deviations above the mean (σ=0.0162), meaning rollouts that preserved this chunk are 32% more likely to receive "good" quality ratings. This sentence acts as a critical constraint that keeps all subsequent creative decisions on track. Similarly, broader instructions like "Keep them sparse so the image stays elegant and B/W" (+0.030) and form specifications for the Baku creature (+0.026) show high importance. It seems the model thrives on clear, architectural constraints.

What surprises me is the disproportionate influence of emotion. While only 3 out of 49 chunks (6%) are categorized as "Emotional Interpretation," they average +0.007 importance—higher than expected. The most impactful is a sentence specifying Baku's expression as "protective and tired rather than threatening" (+0.026), which appears to anchor the entire mood trajectory of the resulting artwork.

Interestingly, over-specificity hurts quality. The most harmful one, with a score of -0.052 (or ~3.2 standard deviations below the mean, quite significant), is an overly detailed texture description: "short, velvety fur on head; subtle wrinkles and pores on trunk; use kneaded-eraser lifts for rim lights..." It seems that when the model commits early to this level of granular detail, its creative exploration becomes constrained and less coherent. Similarly, micro-level instructions like "Add tiny bulb highlights...keep them irregular" (-0.020) reduce overall quality, suggesting they box in the process prematurely.

1. [Technical Analysis] Score: +0.032

“Value plan (make a 3–5 value thumbnail first) - Lightest lights: highlights on the three faces; mist around them; rim light on Baku’s trunk; bulbs on the Wonder Wheel/Parachute Jump.”

2. [Technical Analysis] Score: +0.030

“Keep them sparse so the image stays elegant and B/W.”

3. [Technical Analysis] Score: +0.026

“Baku - Form: tapir/elephant hybrid as in the sketch; do not morph into other creatures.”

4. [Emotional Interpretation] Score: +0.026

“- Expression: protective and tired rather than threatening.”

5. [Technical Analysis] Score: +0.022

“Render spokes and gondola silhouettes selectively; break lines with fog so it doesn’t overpower the faces.”

This leads to one of my main findings: while "Technical Analysis" dominates the plan (making up 80% of the sentences), its overall impact is neutral. What matters is the kind of technical instruction. High-level constraints (like value plans and composition) are helpful, while premature, low-level detail is harmful.

The quality variance across the different generated plans is remarkably low and consistent. This is fundamentally different from a math problem, where one wrong intermediate step can cascade into a completely wrong answer. In this creative task, even when the model is forced to resample from a weaker part of the plan, its overall quality remains stable. It seems that creative reasoning, at least for this model, is more resilient and less path-dependent than logical deduction.

The result is a text plan to complete my sketch, with its most influential steps now identified.

...Mood and concept - Melancholic intimacy with NYC-as-creature: Coney Island appears alive; the Baku protects/consumes dreams while the figures drift between wake and sleep. - Quiet, reflective dusk feel achieved only with values (no color). 2) Composition and placements (lock these before rendering) - Three faces stacked vertically, following the sketch: - Top profile facing left, partially overlaid by the Wonder Wheel halo. - Middle face in 3/4 looking up/right (primary focal point)...

Painting the Sound

With the plan in my clipboard, it is time to execute. I feed the detailed plan back to GPT-5 along with my original unfinished sketch to generate the completed image (this time the model is no longer able to see my reference finished work). Even with the best instructions, a straight, one-shot generation rarely feels right. I run the same prompt a few times and find the outcomes are remarkably consistent, with only minor variations between each rendering. My role now shifts to that of a curator, or the judge with a taste as Sesh would say, as I select the final rendering.

I then apply hue changes and paint over details in Adobe Fresco to better represent my style.

This brings me to the next planned phase of the experiment, which is designed to directly confront the question of fulfillment. The original debate on that cold beach wasn't just about making art; it's about the feeling of making art. My hypothesis is that perhaps even if I delegate the physical "doing," I might be able to reclaim that lost tactile connection by creating a new sensory feedback loop. The plan is to create a post-hoc sensory artifact that represents the creative flow. This is another facet of the neural surrogate concept. If the text plan represents the surrogate's rational "thoughts," then translating those thoughts into music could represent its emotional undercurrent. I should be clear: music is not my area of expertise. This step isn't about delegating a skill I already have, like drawing. It's an intentional choice to add a new dimension to the experiment—a form I can feel but couldn't create myself. I take the top five most important thought anchors and feed them as a prompt to Suno.

The result is unexpectedly joyful. The act of translating the artwork's conceptual core into sound is deeply captivating. I get carried away, generating song after song, constantly listening, comparing, and selecting the "better" one. The whole time, I am processing the artistic concept in the background, seeing how a phrase like "a small cluster of magenta to anchor the side" could be interpreted musically. It feels less like delegating a task and more like a conversation.

Now, there's one final step to bring the entire digital journey back into the physical world. I have these songs, all born from the same conceptual seeds but different in their execution. I'm curious if there's a common thread, a musical pattern that unites them. I write a Python script to find out. I use CLAP 512-dimensional embeddings and cosine similarity to analyze the audio and identify the single passage from each song that is most similar to a passage in the others. The script finds them—a few seconds of audio from each track that represent their shared core. I visualize these passages as simple waveforms.

With the image now printed on canvas, I take out my acrylic paints. I'm not just adding random details; I'm using the shapes of the sound—the visual echo of the LLM's most important thoughts—as inspiration to add the final, physical touches, painting the sound onto the image.

Pondering the Bridge: Reflections on Minds

This feeling—this surprising sense of connection and fulfillment from the process—makes me want to find a language to understand why this multi-step, mediated workflow feels so different from a simple, one-shot generation. I start digging into neuroscience and cognitive science, not as an expert, but as an artist and engineer trying to piece together a map for this new territory.

The workflow is a clear example of cognitive offloading, but the constant back-and-forth felt more like distributed cognition, where the thinking is partitioned across a partnership. My experience curating the AI's plan and the Suno outputs is this in practice; I'm not just passively offloading work, I'm actively interrogating the process at multiple, meaningful checkpoints. This strengthens my Sense of Agency—the feeling of being in control.

The surprising joy I felt generating the music makes me wonder about the neural mechanics of fulfillment. When creating manually, the connection between a thought and the immediate sensory feedback forms a powerful loop based on the principle of Hebbian learning—"neurons that fire together, wire together." With the neural surrogate, that direct loop is broken. But perhaps a new one is being formed. My research points to a concept called Dopamine-Modulated Hebbian learning. The idea, as I understand it, is that dopamine acts as a "teaching signal" for the brain's reward system. A positive outcome can trigger a dopamine release that retroactively strengthens the neural connections that led to it. The act of listening to the music while consciously reflecting on the thought anchors that generated it feels exactly like this. The rewarding sensory experience acts as the teaching signal, strengthening the association back to the original, abstract plan, forming that "fire together, wire together" connection across a temporal gap. The music, generated via ideasthesia (a concept evoking a sensory experience), becomes a mnemonic device, a sensory anchor for the GPT-5's abstract plan, re-engaging my brain's dopaminergic system. This process is a series of discrete sub-goals, each offering its own opportunity for a rewarding "win": the moment a coherent plan is generated, the satisfaction of identifying the key anchors, seeing the the LLM execute the plan faithfully, and the surprising joy of hearing the system generate a musically pleasing artifact from my ideas.

This brings me back to the feeling itself. The joy and deep engagement from the process demand an explanation. In art, the pinnacle of this feeling is the classic "flow state." So, I start to wonder how my experience as a supervisor compares. One leading theory, transient hypofrontality, suggests that flow involves a quieting of the prefrontal cortex, the brain's "inner critic." This works for experts who can rely on well-practiced, automatic skills. My role here is the opposite: I'm a supervisor, a creative director, and my prefrontal cortex is highly engaged in evaluating and guiding the AI. This points to a different kind of mental state, perhaps what could be called "supervisory slow," where deep immersion arises from successfully orchestrating a complex, external system, not from uninhibited doing.

This exploration leaves me with sharper questions. Is pre-existing expertise a prerequisite for this fulfillment? My intuition says yes. Part of the satisfaction I feel comes from the confidence that, given enough time, I could have done this myself. My expertise allows me to delegate strategically. Without that confidence, born from years of practicing my "skill of origin," would the fulfillment be less? This creates the ultimate paradox: how do you gain initial expertise if you constantly offload the work? And how do you maintain your foundational skills?

The answer may be two-fold. One, it lies in redefining the work itself. My expertise in this workflow isn't just in the fine motor control of my hand, but in my ability to act as a creative director. These are new skills—strategic prompting, critical evaluation, system orchestration—and this process (or something like it), with its constant feedback loops, may become a dedicated training ground for developing them. Two, as Karpathy speculates in that same podcast, people in the future may want to exercise their "cognitive muscle" in the same way they go to the gym, because it will still be a mark of distinction. That's a hopeful thought. It suggests a future where the role of the artist evolves from a craftsperson to a creative director, and expertise shifts to a new, demanding set of curatorial skills.

Future Directions

This project feels less like an answer and more like the beginning of a new set of questions. The experience was fulfilling, but it also made me think about how much deeper this collaboration could go. I find myself thinking about what comes next, not just as technical upgrades, but as ways to further explore that bridge between human thought and machine process.

And why stop at sound? The principle of translating a conceptual "thought anchor" into a sensory output could be extended. My friend Aliaksandra, a sensory expert at Osmo, introduced me to their fascinating work in this area. As I understand it, their AI uses a "Principal Odor Map"—an embedding space linking a molecule's structure to its perceived scent—to translate high-level concepts directly into a chemical formula. This opens up an incredible possibility. A thought anchor like "a bold, aggressive stroke" could be translated into a text brief, like "a sharp, citrus scent," which would then query their map to find the corresponding molecules. This creation of a multi-sensory "process-scape" could make the AI's logic even more tangible and memorable.

This project is an evolution from a simple question on a cold beach. The goal is no longer just to reconcile art and technology, but to design a partnership that is not only productive, but also deeply fulfilling. It’s a bridge from one to the other—a way to embrace technology without losing our own voice, and to find, in the process, a new and unexpected kind of joy.